Artificial intelligence is no longer something happening quietly in massive data centers far away from everyday users. In 2026, AI lives inside smartphones, laptops, cars, security cameras, and even household appliances. This shift has brought a critical question to the surface: should AI live on the device itself, or should it continue relying on the cloud?

The debate around on-device AI vs cloud AI is no longer theoretical. It directly affects AI privacy expectations, real-world performance, and how much companies pay to operate AI at scale. Users want instant responses without feeling watched. Regulators want stronger AI security and privacy guarantees. Businesses want innovation without unpredictable cloud bills.

Cloud AI made artificial intelligence accessible to the world, but edge AI and edge computing are changing where intelligence actually belongs. Add hybrid edge cloud AI, private cloud compute, and private AI compute to the equation, and the decision becomes less about technology trends and more about long-term strategy.

This article breaks down on-device AI vs cloud AI through the lenses of privacy, speed, and cost, helping businesses and tech-savvy readers understand what really matters as AI trends continue to evolve in 2026.

What cloud AI really is

Cloud AI refers to artificial intelligence systems that rely on centralized servers to process data, run models, and deliver results back to users. Instead of performing AI tasks locally, devices collect information and send it to powerful cloud infrastructure where the computation happens.

This approach became dominant because early AI workloads demanded far more computing power than most devices could provide. Training and running advanced models required specialized hardware, large memory pools, and experienced engineering teams. Cloud platforms solved these challenges by offering scalable AI capabilities as a service.

By centralizing compute resources, cloud AI allowed organizations to:

- Access high-performance computing without owning hardware

- Scale AI workloads up or down based on demand

- Deploy updates and model improvements from a single location

- Reduce time-to-market for AI-powered products

Cloud AI was especially attractive to startups and fast-growing companies. Instead of investing heavily in infrastructure, teams could focus on building products and experimenting with AI features quickly. Enterprises also benefited from predictable scaling and centralized management across regions.

Another major advantage was data aggregation. Cloud AI thrives on large datasets, and centralized systems made it easier to collect, store, and analyze information from millions of users. This data-driven feedback loop accelerated model improvement and reinforced the early dominance of cloud-based AI.

However, as AI expanded into privacy-sensitive and real-time use cases, the limitations of centralized processing around data control, latency, and long-term cost became increasingly apparent.

The privacy problem with cloud AI

As cloud AI became embedded in everyday products and services, concerns around AI privacy began to intensify. The issue is not that cloud AI is inherently insecure, but that its architecture requires data to move away from the user.

Every cloud AI interaction involves transmission. Voice inputs, images, documents, sensor readings, and behavioral data are sent from devices to remote servers for processing. Even with encryption in place, this movement increases exposure and adds complexity.

From a user perspective, this creates uncertainty around where data is stored, how long it is retained, and who can access it. From a business perspective, it introduces responsibility. Organizations become custodians of large volumes of sensitive information, making them attractive targets for breaches and regulatory scrutiny.

Key privacy challenges associated with cloud AI include:

- Data leaving the user’s physical control

- Greater impact from centralized data breaches

- Complex compliance across multiple jurisdictions

- Limited transparency for end users

Regulators have responded by emphasizing data minimization and tighter controls over data transfers. While cloud AI can meet these requirements, doing so often requires additional governance, monitoring, and legal overhead. These pressures have pushed many organizations to explore alternatives that reduce unnecessary data movement altogether.

What on-device AI and edge AI really mean

On-device AI refers to artificial intelligence systems that run directly on the device where the data is generated. Instead of sending information to remote servers, the AI processes it locally on smartphones, laptops, cameras, vehicles, or industrial sensors.

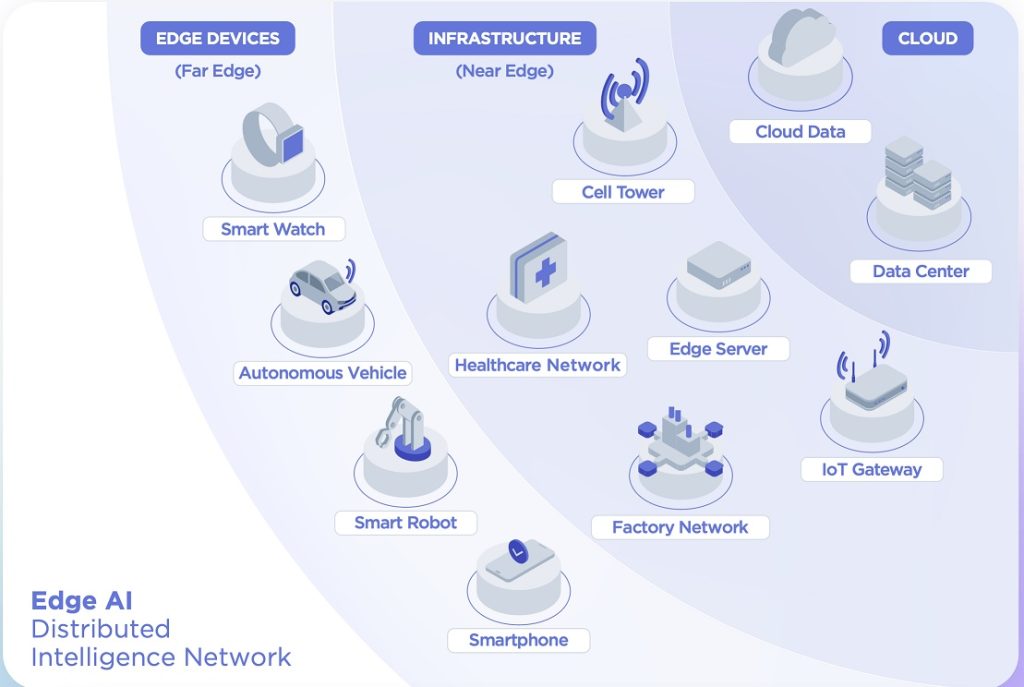

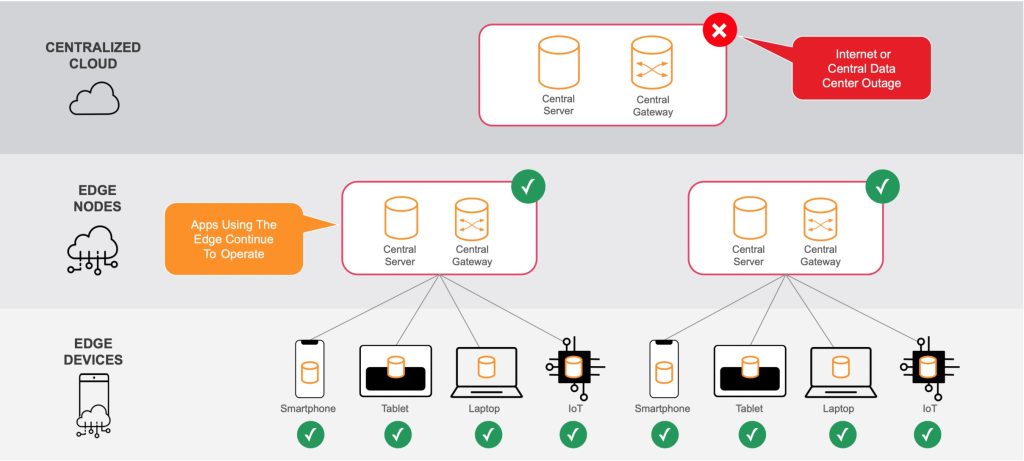

Edge AI is a broader concept that includes on-device AI as well as nearby processing nodes such as local gateways or edge servers. Together, they form the foundation of edge computing and AI, where intelligence is placed closer to the source of data.

This shift fundamentally changes how AI interacts with information. Data no longer needs to travel long distances, and decisions can be made almost instantly. In many cases, data never leaves the device at all.

Common examples of on-device and edge AI include:

- Smartphones performing image recognition locally

- Smart cameras detecting motion without constant cloud uploads

- Vehicles processing sensor data in real time

- Wearables analyzing health metrics on the device

Processing data locally also delivers significant AI privacy benefits. Personal information stays on the device, reducing exposure to interception and large-scale breaches. Smaller centralized datasets lower overall risk and make compliance with data residency requirements easier to demonstrate.
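As a rough illustration of what "data stays on the device" can look like in practice, the sketch below reduces raw wearable readings to a small summary before anything is uploaded. The function name, the field names, and the 100 bpm limit are hypothetical choices made for this example, not part of any specific product or standard.

```python
from statistics import mean
from typing import Dict, List


def summarize_on_device(
    heart_rates_bpm: List[int], resting_limit_bpm: int = 100
) -> Dict[str, object]:
    """Reduce raw readings to the few fields a backend might actually need.

    The limit of 100 bpm is an assumed example value, not medical guidance.
    """
    return {
        "sample_count": len(heart_rates_bpm),
        "mean_bpm": round(mean(heart_rates_bpm), 1),
        "elevated": max(heart_rates_bpm) > resting_limit_bpm,
    }


raw_readings = [62, 64, 61, 70, 66]           # raw data, kept on the device
payload = summarize_on_device(raw_readings)   # the only data that would leave it
print(payload)
```

The individual readings never appear in the payload, so a breach of the backend would expose only the summary.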

Beyond privacy, proximity is the defining advantage of edge AI. By keeping processing close to the data source, systems become faster, more resilient, and less dependent on constant connectivity. As hardware continues to improve, edge AI is evolving from a limited alternative into a powerful complement to cloud AI.

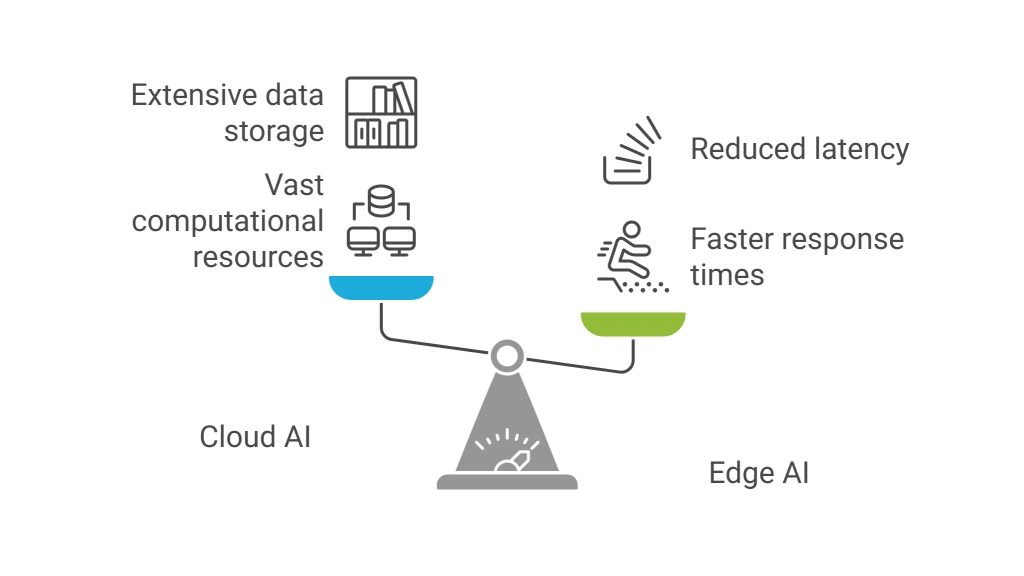

Speed and latency differences between edge AI and cloud AI

Speed is one of the most visible differences between on-device AI and cloud AI. Latency, the delay between an action and a response, can make or break the user experience, especially in real-time applications.

Cloud AI introduces unavoidable delays. Data must travel from the device to the cloud, be processed, and then be sent back. Even with fast networks, this round trip often adds tens to hundreds of milliseconds depending on network conditions, which users can perceive as lag.

Edge AI largely eliminates this delay by processing data locally. Decisions happen almost instantly, without waiting for a network response.
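To make the round trip concrete, here is a small latency budget sketch. Every number in it is an assumption chosen for illustration, not a measurement, and real values vary widely with the network, the model, and the hardware.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Illustrative latency components, all in milliseconds."""

    uplink_ms: float      # device -> server network time
    inference_ms: float   # time spent running the model
    downlink_ms: float    # server -> device network time

    def total_ms(self) -> float:
        return self.uplink_ms + self.inference_ms + self.downlink_ms


# Assumed, not measured: a fast cloud model reached over a mobile network.
cloud = LatencyBudget(uplink_ms=40.0, inference_ms=15.0, downlink_ms=40.0)

# Assumed, not measured: a slower on-device model with no network hop.
on_device = LatencyBudget(uplink_ms=0.0, inference_ms=30.0, downlink_ms=0.0)

print(f"cloud:     {cloud.total_ms():.0f} ms")
print(f"on-device: {on_device.total_ms():.0f} ms")
```

Under these assumptions the cloud model is twice as fast at inference, yet the on-device path still responds sooner because it skips the network entirely.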

Use cases where low latency matters most include:

- Voice assistants responding to commands

- Image and video analysis

- Augmented and virtual reality experiences

- Industrial automation and robotics

In these scenarios, on-device AI delivers smoother and more reliable performance. It also continues to function when connectivity is weak or unavailable, something cloud AI cannot do without a network connection.

Cloud AI still performs well for tasks that are not time-sensitive, such as batch processing or long-running analytics. But for interactive, real-time experiences, the speed advantage of edge AI is difficult to ignore.

Cost comparison between cloud AI and on-device AI

Cost is often the deciding factor when organizations compare on-device AI vs cloud AI, but it is also the most misunderstood. At first glance, cloud AI appears cheaper because it avoids upfront hardware investment. In reality, long-term costs can tell a very different story.

Cloud AI follows a usage-based pricing model. You pay for compute, storage, data transfer, and sometimes even the number of requests made to an AI model. This works well at small scale, but costs can grow quickly as AI adoption increases.

Common cost drivers for cloud AI include:

- High-frequency inference requests

- Continuous data uploads and downloads

- Long-term storage of raw and processed data

- Scaling infrastructure during peak usage

This is why cloud AI cost optimization has become a critical focus for businesses in 2026. What starts as a convenient solution can quietly become one of the largest operational expenses.

On-device AI shifts the cost structure. There is a higher upfront investment in capable hardware, but once deployed, inference happens without recurring cloud fees. For applications with frequent, repetitive AI tasks, this can lead to significant savings over time.

The most cost-effective approach depends on usage patterns. Cloud AI favors flexibility and experimentation, while edge AI favors predictability and scale.
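A simple break-even calculation shows how usage patterns drive this decision. The sketch below uses made-up prices and deliberately ignores factors such as on-device energy use, maintenance, and model updates, so treat it as a starting point rather than a pricing model.

```python
import math


def cloud_cost(requests: int, price_per_1k: float) -> float:
    """Usage-based cost: grows linearly with the number of requests."""
    return requests / 1000 * price_per_1k


def break_even_requests(hardware_premium: float, price_per_1k: float) -> int:
    """Requests per device after which the hardware premium is paid back."""
    return math.ceil(hardware_premium / price_per_1k * 1000)


# Assumed figures for illustration only.
HARDWARE_PREMIUM = 20.00   # extra cost per device for AI-capable hardware
PRICE_PER_1K = 0.50        # cloud price per 1,000 inference requests

threshold = break_even_requests(HARDWARE_PREMIUM, PRICE_PER_1K)
print(f"break-even at {threshold} requests per device")
```

With these assumed figures, a device that makes fewer requests than the threshold over its lifetime is cheaper to serve from the cloud, while a device that makes more favors local inference.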

Cloud AI cost optimization strategies

As cloud AI adoption matures, organizations are becoming far more intentional about how they manage spending. Cloud AI cost optimization is no longer just about reducing bills; it is about building AI systems that scale sustainably.

Many AI workloads are initially designed for convenience rather than efficiency. Over time, this leads to rising costs as usage increases and models are called more frequently.

Effective cloud AI cost optimization strategies include:

- Reducing unnecessary inference requests

- Compressing and optimizing deployed models

- Implementing usage monitoring and limits

- Moving predictable workloads off the cloud

- Caching repeated AI responses where possible
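Two of the strategies above, caching repeated responses and enforcing usage limits, can be sketched in a few lines. The class and function names here are hypothetical, and `fake_cloud_model` is a stand-in for whatever billed inference endpoint a team actually uses.

```python
import hashlib
from typing import Callable, Dict


class CachedModelClient:
    """Wraps a model call with a response cache and a hard request budget."""

    def __init__(self, call_model: Callable[[str], str], max_requests: int) -> None:
        self._call_model = call_model
        self._max_requests = max_requests
        self._cache: Dict[str, str] = {}
        self.billable_requests = 0

    def ask(self, prompt: str) -> str:
        # Normalize so trivially different prompts share one cache entry.
        normalized = prompt.strip().lower()
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key in self._cache:
            return self._cache[key]      # served locally, no cloud charge
        if self.billable_requests >= self._max_requests:
            raise RuntimeError("request budget exhausted")
        self.billable_requests += 1
        answer = self._call_model(prompt)
        self._cache[key] = answer
        return answer


def fake_cloud_model(prompt: str) -> str:
    """Stand-in for a real, billed inference endpoint."""
    return f"answer to: {prompt.strip().lower()}"


client = CachedModelClient(fake_cloud_model, max_requests=2)
client.ask("What is edge AI?")
client.ask("what is edge ai?  ")   # cache hit after normalization
client.ask("What is cloud AI?")
print(client.billable_requests)
```

Caching like this only suits prompts whose answers can safely be reused; personalized or time-sensitive responses would need a narrower cache key or an expiry policy.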

One of the most impactful approaches is shifting real-time inference workloads to the edge while keeping training and orchestration in the cloud. This hybrid edge cloud AI model allows organizations to maintain flexibility without paying cloud prices for every AI interaction.

Cost optimization also encourages better product design. When teams understand the real cost of AI decisions, they build systems that are more efficient by default, benefiting both users and long-term budgets.

The rise of hybrid edge cloud AI

As organizations confront the trade-offs between privacy, speed, and cost, many are discovering that the most practical solution is not choosing one side, but combining both. This is where hybrid edge cloud AI comes into play.

Hybrid architectures split AI workloads based on what each environment does best. The cloud handles heavy tasks such as model training, large-scale analytics, and centralized coordination. Edge devices take care of real-time inference, local decision-making, and privacy-sensitive processing.

This approach offers several advantages:

- Reduced latency for user-facing interactions

- Lower cloud operating costs over time

- Improved AI privacy through local processing

- Continued access to powerful cloud infrastructure
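The workload split described above can be expressed as a small routing rule. This is a minimal sketch: the `Workload` fields, the 100 ms threshold, and the order of the checks are assumptions made for illustration, and a real policy would likely weigh more factors, such as battery, model size, and regulatory constraints.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    EDGE = "edge"
    CLOUD = "cloud"


@dataclass
class Workload:
    name: str
    contains_personal_data: bool
    max_latency_ms: float    # how quickly a response is needed
    fits_on_device: bool     # whether local hardware can run the model


# Assumed threshold: below this, a network round trip is treated as too slow.
REAL_TIME_THRESHOLD_MS = 100.0


def route(w: Workload) -> Target:
    """Pick where a workload runs, following the edge/cloud split above."""
    if not w.fits_on_device:
        return Target.CLOUD      # e.g. training, large-scale analytics
    if w.contains_personal_data:
        return Target.EDGE       # privacy-sensitive processing stays local
    if w.max_latency_ms < REAL_TIME_THRESHOLD_MS:
        return Target.EDGE       # real-time inference
    return Target.CLOUD


examples = [
    Workload("wake-word detection", True, 50.0, True),
    Workload("model training", False, 3_600_000.0, False),
    Workload("weekly usage report", False, 60_000.0, True),
]
for w in examples:
    print(f"{w.name} -> {route(w).value}")
```

One simplification to note: a workload that contains personal data but does not fit on the device is sent to the cloud here, whereas a stricter policy might send it to private compute instead.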

Hybrid edge cloud AI also allows businesses to evolve gradually. Instead of redesigning everything at once, teams can move specific workloads to the edge while keeping existing cloud systems intact.

This flexibility is a major reason why hybrid architectures are shaping AI trends in 2026. They provide a path forward that balances innovation with responsibility, without forcing extreme architectural decisions.

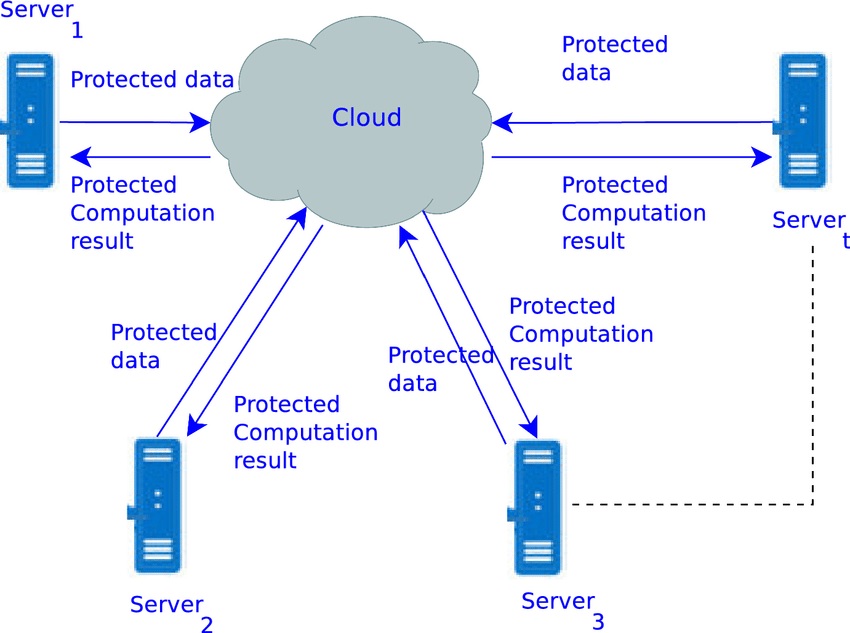

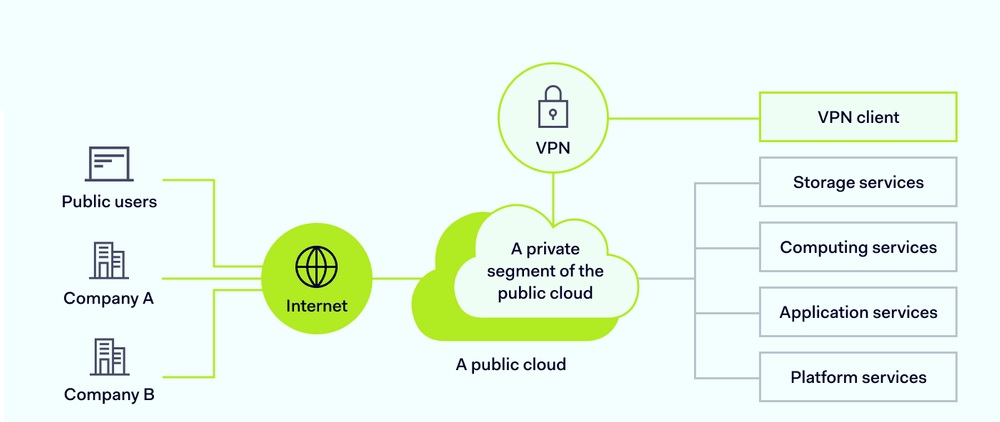

Private cloud compute and private AI compute explained

Not every organization is comfortable relying entirely on public cloud infrastructure. For industries handling highly sensitive data, private cloud compute and private AI compute offer an alternative that balances control with performance.

Private AI environments operate on dedicated infrastructure, either on-premises or hosted in isolated facilities. Unlike public cloud systems, resources are not shared with other customers, giving organizations greater visibility and governance over their data and AI workloads.

Private cloud compute is particularly attractive for organizations that need:

- Strict compliance with data protection regulations

- Full control over data storage and processing

- Custom security policies and access controls

- Predictable performance without shared resource contention

Private AI compute allows these organizations to run advanced AI models while keeping sensitive information within tightly controlled environments. While upfront costs are higher, many enterprises view this as a long-term investment in risk reduction and operational stability.

As privacy regulations tighten and AI becomes more deeply embedded in critical systems, private AI infrastructure is playing a growing role in enterprise AI strategies.

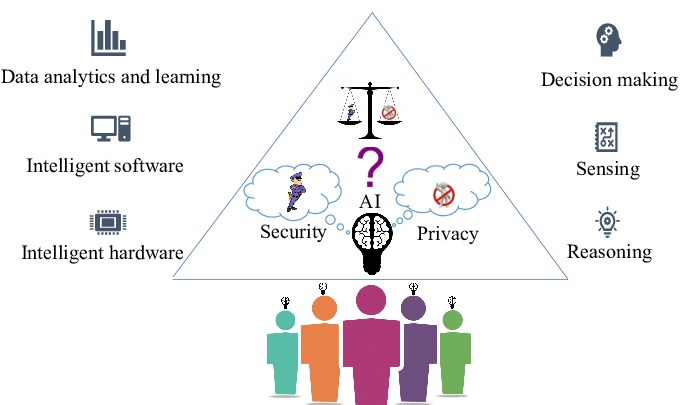

Regulatory pressure and AI security in 2026

By 2026, regulation is no longer a background consideration for AI systems; it is a driving force behind architectural decisions. Governments and regulatory bodies are placing greater emphasis on transparency, data minimization, and accountability.

These regulations directly impact how AI systems are designed and where data is processed. Cloud AI, which often involves cross-border data transfers and shared infrastructure, faces increasing scrutiny. Ensuring compliance can require extensive documentation, audits, and ongoing monitoring.

Edge AI aligns more naturally with regulatory expectations because it reduces unnecessary data movement. Processing information locally supports principles such as data minimization and purpose limitation, making compliance easier to demonstrate.

Key regulatory pressures influencing AI architecture include:

- Stricter data residency requirements

- Higher penalties for data breaches

- Greater demand for explainable AI decisions

- Increased user rights over personal data

For many organizations, these pressures are accelerating the adoption of hybrid edge cloud AI and private cloud compute. Security is no longer just about protecting systems; it is about proving that protection exists.

Final thoughts

The conversation around on-device AI vs cloud AI has evolved from a technical debate into a strategic one. In 2026, decisions about where AI runs directly affect privacy posture, user experience, regulatory compliance, and long-term cost control.

Cloud AI continues to play a critical role, especially for large-scale model training, coordination, and analytics. At the same time, edge AI and on-device AI are proving their value by delivering faster responses, stronger AI privacy, and more predictable operating costs. Neither approach is universally better, but each excels in different situations.

This is why hybrid edge cloud AI is emerging as the most practical path forward. By distributing workloads intelligently, organizations can protect sensitive data, improve performance, and avoid unnecessary cloud expenses. For regulated environments, private cloud compute and private AI compute add an additional layer of control and assurance.

As AI trends continue to unfold in 2026, the most successful AI strategies will be flexible, privacy-aware, and designed for real-world constraints. The future of AI is not about choosing a side; it is about choosing the right balance.

Frequently asked questions

What is the main difference between on-device AI and cloud AI?

The main difference is where data is processed. On-device AI runs directly on the user’s device, while cloud AI sends data to centralized servers for processing.

Is on-device AI better for AI privacy?

Yes, on-device AI generally offers stronger AI privacy because data can be processed locally without being transmitted to the cloud.

Does cloud AI still make sense for businesses in 2026?

Cloud AI remains essential for tasks like training large models, running complex analytics, and managing AI at scale, especially when combined with edge solutions.

What is hybrid edge cloud AI and why is it important?

Hybrid edge cloud AI splits workloads between local devices and the cloud, balancing privacy, speed, and cost while remaining flexible.

When should companies consider private AI compute?

Private AI compute is best suited for industries with strict regulatory requirements or highly sensitive data, such as healthcare, finance, and government.